When evaluating a scientific camera, technical specifications can be overwhelming — pixel size, quantum efficiency, dynamic range, and more. Among these specs, bit depth is one of the most critical for determining how much information your camera can capture and how faithfully it represents fine details.

In scientific imaging, where subtle variations in brightness can represent important data, understanding bit depth isn't optional — it’s essential.

This article explains what bit depth is, how it affects image quality, its role in data accuracy, and how to choose the right bit depth for your application.

Bit Depth: The Maximum Gray Level Count in an Image Pixel

When working with a scientific camera, bit depth defines how many distinct intensity values each pixel can record. This is crucial because in scientific imaging, each pixel’s value may correspond directly to a measured quantity, such as photon count or fluorescence intensity.

Bit depth is the number of binary digits (bits) that each pixel uses to store its intensity value; 8 bits make up one byte. The number of available gray levels is given by:

Maximum gray levels = 2^(Bit depth)

For example:

● 8-bit = 256 levels

● 12-bit = 4,096 levels

● 16-bit = 65,536 levels

More gray levels allow for finer brightness gradations and more accurate representation of subtle differences, which can be critical when measuring faint signals or performing quantitative analysis.
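The relationship between bit depth and gray levels can be checked with a few lines of Python. This is a minimal sketch; the function names are illustrative and not part of any camera SDK. Note that because counting starts at zero, the largest storable pixel value is one less than the number of levels.

```python
def gray_levels(bit_depth: int) -> int:
    """Number of distinct intensity values a pixel of this bit depth can hold."""
    if bit_depth < 1:
        raise ValueError("bit depth must be a positive integer")
    return 2 ** bit_depth


def max_pixel_value(bit_depth: int) -> int:
    """Largest storable value; codes start at 0, so it is one below the level count."""
    return gray_levels(bit_depth) - 1


for bits in (8, 12, 14, 16):
    print(f"{bits}-bit: {gray_levels(bits):,} levels, values 0 to {max_pixel_value(bits):,}")
```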

Bit depth and speed

Increasing bit depth means that the analogue-to-digital converters (ADCs) must output more bits per measurement. This usually requires them to reduce their measurements per second – i.e., to reduce camera frame rate.

For this reason, many scientific cameras offer two acquisition modes:

● High bit depth mode – Prioritizes tonal resolution and typically offers higher dynamic range, which suits applications like fluorescence microscopy or spectroscopy.

● High-speed mode – Reduces bit depth in favor of faster frame rates, which is essential for capturing fast events.

Knowing this trade-off helps you select the mode that aligns with your imaging goals — precision vs. temporal resolution.
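To get a feel for the trade-off, here is a deliberately simplified model. It assumes the readout chain can deliver a fixed number of bits per second, so halving the bits per pixel doubles the achievable frame rate. Real cameras are limited by ADC design, interface bandwidth, and other factors, so the throughput figure and the function name below are hypothetical and the result should be read as an illustration only.

```python
def max_frame_rate(throughput_bits_per_s: float, width: int, height: int,
                   bit_depth: int) -> float:
    """Upper bound on frames per second under a fixed bit-throughput budget (assumed model)."""
    bits_per_frame = width * height * bit_depth
    return throughput_bits_per_s / bits_per_frame


# Hypothetical 2048 x 2048 sensor with an assumed 8 Gbit/s readout budget
THROUGHPUT = 8e9
for bits in (16, 12, 8):
    fps = max_frame_rate(THROUGHPUT, 2048, 2048, bits)
    print(f"{bits}-bit mode: up to about {fps:.0f} frames per second")
```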

Bit depth and dynamic range

It’s common to confuse bit depth with dynamic range, but they are not identical. Bit depth defines the number of possible brightness levels, while dynamic range describes the ratio between the faintest and brightest detectable signals.

The relationship between the two depends on additional factors such as camera gain settings and readout noise. In fact, dynamic range can be expressed in “effective bits,” meaning that noise performance may reduce the number of bits that contribute to usable image data.

For camera selection, this means you should evaluate both bit depth and dynamic range together rather than assuming one fully defines the other.
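As a rough sketch of how dynamic range translates into "effective bits", the snippet below uses a common definition: dynamic range as the ratio of full-well capacity to read noise, both in electrons. That definition and the example sensor values are assumptions for illustration and are not taken from a specific camera datasheet.

```python
import math


def dynamic_range_ratio(full_well_e: float, read_noise_e: float) -> float:
    """Ratio of the brightest to the faintest detectable signal (assumed definition)."""
    return full_well_e / read_noise_e


def effective_bits(full_well_e: float, read_noise_e: float) -> float:
    """Dynamic range expressed as a number of bits."""
    return math.log2(dynamic_range_ratio(full_well_e, read_noise_e))


def dynamic_range_db(full_well_e: float, read_noise_e: float) -> float:
    """Dynamic range expressed in decibels."""
    return 20 * math.log10(dynamic_range_ratio(full_well_e, read_noise_e))


# Hypothetical sensor: 30,000 e- full well, 1.5 e- read noise
bits = effective_bits(30_000, 1.5)
print(f"Dynamic range: {dynamic_range_ratio(30_000, 1.5):,.0f}:1, about {bits:.1f} effective bits")
```

With these assumed numbers the sensor delivers a little over 14 effective bits, so a 16-bit output would carry roughly two bits that mostly encode noise.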

Data storage

The bytes of data storage required per camera frame (without compression) can be calculated as:

Bytes per frame = (Number of pixels × Bit depth) / 8

Additionally, some file formats — like TIFF — store 9- to 16-bit data inside a 16-bit "wrapper". This means that even if your image only uses 12 bits, the storage footprint may be the same as a full 16-bit image.

For labs handling large datasets, this has practical implications: higher bit depth images demand more disk space, longer transfer times, and more computing power for processing. Balancing precision needs with data management capacity is essential for an efficient workflow.
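The storage arithmetic can be sketched as follows, assuming uncompressed data and that bytes per frame equal pixels times bits divided by 8. The second function models the 16-bit wrapper described above for 9- to 16-bit data. The function names and the 2048 × 2048 sensor size are illustrative assumptions.

```python
def bytes_per_frame_packed(width: int, height: int, bit_depth: int) -> int:
    """Bytes per frame if bits are packed tightly with no padding."""
    total_bits = width * height * bit_depth
    return (total_bits + 7) // 8  # round up to a whole byte


def bytes_per_frame_in_container(width: int, height: int, bit_depth: int) -> int:
    """Bytes per frame when each pixel is stored in an 8-bit or 16-bit container."""
    if not 1 <= bit_depth <= 16:
        raise ValueError("this sketch only covers bit depths from 1 to 16")
    container_bits = 8 if bit_depth <= 8 else 16
    return width * height * container_bits // 8


# Hypothetical 2048 x 2048 sensor
for bits in (8, 12, 16):
    packed = bytes_per_frame_packed(2048, 2048, bits)
    stored = bytes_per_frame_in_container(2048, 2048, bits)
    print(f"{bits}-bit: {packed / 2**20:.1f} MiB packed, {stored / 2**20:.1f} MiB in container")
```

For the assumed sensor, a 12-bit frame needs 6 MiB when packed but 8 MiB in a 16-bit container, the same as a true 16-bit frame.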

How Bit Depth Affects Image Quality

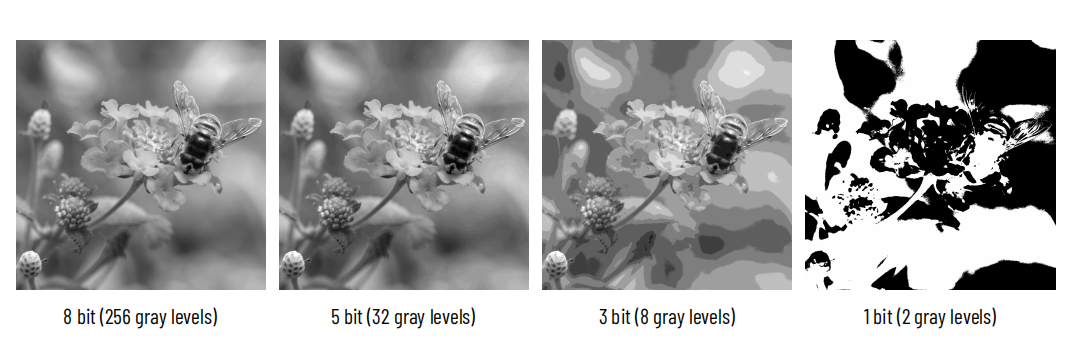

Bit depth examples: an illustration of the concept of bit depth. Reducing bit depth reduces the number of intensity steps available to display the image.

Bit depth has a direct impact on several aspects of image quality in a scientific camera.

Dynamic Range

Higher bit depth provides more brightness levels, so a camera whose sensor has the dynamic range to use them can preserve detail in both shadows and highlights.

For example, in fluorescence microscopy, dim features might be barely visible in an 8-bit image but are more distinct in a 16-bit capture.

Smoother Tonal Gradations

Higher bit depths allow smoother transitions between brightness levels, avoiding “banding” in gradients. This is especially important in quantitative analysis, where abrupt jumps can distort results.
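Banding can be illustrated by quantizing a faint, smooth gradient and counting how many distinct codes survive. This is a minimal sketch using an idealized noise-free ramp that spans only 1% of full scale; the ramp and the helper names are assumptions for illustration.

```python
def quantize(value: float, bit_depth: int) -> int:
    """Map a normalized intensity in [0, 1] to the nearest integer code."""
    max_code = 2 ** bit_depth - 1
    clipped = min(max(value, 0.0), 1.0)
    return round(clipped * max_code)


def distinct_levels(samples, bit_depth: int) -> int:
    """Count how many different codes the samples occupy after quantization."""
    return len({quantize(v, bit_depth) for v in samples})


# Smooth ramp from 20% to 21% of full scale, sampled at 1000 points
ramp = [0.20 + 0.01 * i / 999 for i in range(1000)]

for bits in (8, 12, 16):
    print(f"{bits}-bit: {distinct_levels(ramp, bits)} distinct codes across the gradient")
```

At 8 bits the whole gradient collapses into a handful of steps, which is what appears as banding; at 16 bits it is spread over hundreds of codes.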

Signal-to-Noise Ratio (SNR) Representation

While bit depth does not directly increase a sensor’s SNR, it enables the camera to more accurately represent subtle signal variations above the noise floor.

If the sensor’s noise is larger than the intensity step that the bit depth provides, the extra bits mostly digitize noise and do not contribute to actual image quality. Keep this in mind when comparing cameras.

Example:

● 8-bit image: Shadows merge, faint features vanish, and subtle changes are lost.

● 16-bit image: Gradations are continuous, faint structures are preserved, and quantitative measurements are more reliable.

Bit Depth and Data Accuracy in Scientific Imaging

In scientific imaging, an image isn’t just a picture — it’s data.

Each pixel’s value can correspond to a measurable quantity, such as photon count, fluorescence intensity, or spectral power.

Higher bit depth reduces quantization error — the rounding-off error that occurs when an analog signal is digitized into discrete levels. With more levels available, the digital value assigned to a pixel more closely matches the true analog signal.
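The size of the rounding error can be estimated with the standard result for an ideal uniform quantizer: the worst-case error is half a step, and the RMS error is the step divided by the square root of 12. The sketch below assumes the full-scale signal is divided evenly across all levels; the 30,000-electron full scale is a hypothetical value.

```python
import math


def quantization_step(full_scale: float, bit_depth: int) -> float:
    """Size of one gray level in signal units, for an ideal uniform quantizer."""
    return full_scale / (2 ** bit_depth)


def max_quantization_error(full_scale: float, bit_depth: int) -> float:
    """Worst-case rounding error: half of one step."""
    return quantization_step(full_scale, bit_depth) / 2


def rms_quantization_error(full_scale: float, bit_depth: int) -> float:
    """RMS rounding error of an ideal uniform quantizer: step / sqrt(12)."""
    return quantization_step(full_scale, bit_depth) / math.sqrt(12)


# Hypothetical full scale of 30,000 electrons
for bits in (8, 12, 16):
    step = quantization_step(30_000, bits)
    rms = rms_quantization_error(30_000, bits)
    print(f"{bits}-bit: step {step:.2f} e-, RMS rounding error {rms:.2f} e-")
```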

Why this matters:

● In fluorescence microscopy, a one-step difference in brightness might represent a meaningful change in protein concentration.

● In astronomy, faint signals from distant stars or galaxies could be lost if the bit depth is too low.

● In spectroscopy, a higher bit depth ensures more precise measurements of absorption or emission lines.

An sCMOS camera with 16-bit output can record subtle differences that would be invisible in a lower bit-depth system, making it essential for applications requiring quantitative accuracy.

How Much Bit Depth Do You Need?

Many applications require both high signal levels and high dynamic range, in which case a high bit depth (14-bit, 16-bit or more) can be beneficial.

In low-light imaging, however, signals usually stay far below the saturation level that the available bit depth allows. With 16-bit cameras in particular, the full 16-bit range is seldom needed unless the gain is particularly high.

Higher-speed cameras or camera modes can be just 8-bit, which can be more limiting, though the higher speeds that 8-bit modes can enable often make the trade-off worthwhile. Camera manufacturers can increase the versatility of 8-bit modes to cope with the typical signal levels of different imaging applications through changeable gain settings.
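One way to judge how much bit depth an experiment actually uses is to estimate the peak gray level from the expected signal and the camera gain. The sketch below assumes gain is expressed in electrons per gray level (ADU) and that the camera adds a fixed offset; both conventions and all example numbers are assumptions, so check your camera’s documentation for the actual values.

```python
import math


def peak_gray_level(peak_signal_e: float, gain_e_per_adu: float,
                    offset_adu: float = 0.0) -> float:
    """Expected peak pixel value in gray levels for a peak signal in electrons."""
    return offset_adu + peak_signal_e / gain_e_per_adu


def bits_needed(peak_adu: float) -> int:
    """Smallest bit depth whose maximum code (2**bits - 1) can hold peak_adu."""
    return max(1, math.ceil(peak_adu).bit_length())


# Hypothetical low-light experiment: 500 e- peak signal, 0.5 e-/ADU gain, 100 ADU offset
peak = peak_gray_level(500, 0.5, 100)
print(f"Peak of about {peak:.0f} gray levels fits in {bits_needed(peak)} bits")
```

Under these assumed conditions the signal fits comfortably within a 12-bit range. Choosing a gain with more electrons per gray level would shrink the peak value further, which is how an 8-bit mode can be matched to different signal levels.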

Choosing the Right Bit Depth for Your Application

Here’s a quick reference for matching bit depth to common scientific imaging scenarios:

| Application | Recommended Bit Depth | Reason |
| --- | --- | --- |
| Fluorescence Microscopy | 16-bit | Detect faint signals and subtle intensity differences |
| Astronomy Imaging | 14–16-bit | Capture high dynamic range in low-light conditions |
| Industrial Inspection | 12–14-bit | Identify small defects with clarity |
| General Documentation | 8-bit | Sufficient for non-quantitative purposes |
| Spectroscopy | 16-bit | Preserve fine variations in spectral data |

Trade-offs:

● Higher bit depth = better tonal resolution and accuracy, but larger files and longer processing times.

● Lower bit depth = faster acquisition and smaller files, but risk of losing subtle details.

Bit Depth vs Other Camera Specs

While bit depth is important, it’s only one piece of the puzzle when choosing a scientific camera.

Sensor Type (CCD vs CMOS vs sCMOS)

Different sensor architectures have varying readout noise, dynamic range, and quantum efficiency. For example, a high-bit-depth sensor with poor quantum efficiency may still struggle in low-light imaging.

Quantum Efficiency (QE)

QE defines how efficiently a sensor converts photons into electrons. High QE is crucial for capturing weak signals, and when paired with sufficient bit depth, it maximizes data accuracy.

Dynamic Range

A camera’s dynamic range determines the span between the faintest and brightest signals it can capture simultaneously. Higher dynamic range is most beneficial when matched with a bit depth capable of representing those brightness levels.

Note:

A higher bit depth will not improve image quality if other system limitations (like noise or optics) are the real bottleneck.

For example, an 8-bit camera with very low noise could outperform a noisy 16-bit system in some applications.

Conclusion

In scientific imaging, bit depth is more than a technical specification — it’s a fundamental factor in capturing accurate, reliable data.

From detecting faint structures in microscopy to recording distant galaxies in astronomy, the right bit depth ensures that your scientific camera preserves the details and measurements your research depends on.

When selecting a camera:

1. Match bit depth to your application’s precision needs.

2. Consider it alongside other critical specs like quantum efficiency, noise, and dynamic range.

3. Remember that higher bit depth is most valuable when your system can take advantage of it.

If you’re looking for a CMOS camera or sCMOS camera designed for high-bit-depth scientific imaging, explore our range of models engineered for precision, reliability, and data accuracy.

FAQs

What’s the practical difference between 12-bit, 14-bit, and 16-bit in scientific imaging?

In practical terms, the jump from 12-bit (4,096 levels) to 14-bit (16,384 levels) and then to 16-bit (65,536 levels) allows for progressively finer discrimination between brightness values.

● 12-bit is sufficient for many industrial and documentation applications where lighting is well controlled.

● 14-bit offers a good balance of precision and manageable file size, ideal for most laboratory workflows.

● 16-bit excels in low-light, high-dynamic-range scenarios such as fluorescence microscopy or astronomical imaging, where the ability to record faint signals without losing bright details is crucial.

However, remember that the camera’s sensor noise and dynamic range must be good enough to utilize those extra tonal steps — otherwise, the benefits may not be realized.

Does higher bit depth always result in better images?

Not automatically. Bit depth determines potential tonal resolution, but actual image quality depends on other factors, including:

● Sensor sensitivity (quantum efficiency)

● Readout noise

● Optics quality

● Illumination stability

For example, a high-noise 16-bit CMOS camera might capture no more useful detail than a low-noise 12-bit sCMOS camera in certain conditions. In other words, higher bit depth is most beneficial when paired with a well-optimized imaging system.

Can I downsample from a high-bit-depth image without losing important data?

Yes, and this is common practice. Capturing at a higher bit depth gives you flexibility for post-processing and quantitative analysis. Once the analysis is complete, you can convert the images to 8-bit for presentation or archiving. The conversion itself discards intensity information, so keep the original high-bit-depth files if re-analysis might be needed.
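A conversion to 8-bit can be sketched as a linear rescale between a chosen black point and white point. This is a minimal pure-Python illustration with hypothetical pixel values; image libraries offer their own conversion routines, which may scale or round differently.

```python
def to_8bit(values, black: int, white: int):
    """Linearly rescale so black maps to 0 and white maps to 255, clipping outside that range."""
    if white <= black:
        raise ValueError("white point must be greater than black point")
    scale = 255 / (white - black)
    result = []
    for v in values:
        scaled = round((v - black) * scale)
        result.append(min(max(scaled, 0), 255))
    return result


# Hypothetical 16-bit pixel values, including three that differ by a single gray level
pixels_16bit = [100, 101, 102, 1000, 40000]

print(to_8bit(pixels_16bit, 0, 65535))   # full-range mapping
print(to_8bit(pixels_16bit, 0, 1000))    # stretch the faint end, clip the bright pixel
```

With the full-range mapping, the three neighboring values land on the same 8-bit code, so their differences cannot be recovered from the converted image. This is why the originals are worth keeping.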

What role does bit depth play in quantitative scientific measurements?

In quantitative imaging, bit depth directly influences how accurately pixel values represent real-world signal intensities. This is vital for:

● Microscopy – Measuring fluorescence intensity changes at the cellular level.

● Spectroscopy – Detecting subtle shifts in absorption/emission lines.

● Astronomy – Recording faint light sources over long exposures.

In these cases, insufficient bit depth can cause rounding errors or signal clipping, leading to inaccurate data interpretation.

Want to learn more? Take a look at related articles:

What is Dynamic Range?

Quantum Efficiency in Scientific Cameras: A Beginner’s Guide

Tucsen Photonics Co., Ltd. All rights reserved. When citing, please acknowledge the source: www.tucsen.com

2025/09/30
